“As someone who recognizes the potential of AI to solve many of the challenges our societies face today, I also consider it essential to ensure that AI models are fortified against cyber threats. AI plays a significant role in modern decision-making, which makes data and model integrity ever more important. For this reason, I think it’s important to consider cyber security in relation to the development and use of AI models, and not only how to use AI for cyber security.

While it is impossible to cover every aspect of Cyber Security and AI within the limits of a single blog post, I intend to shed light on how I see the relevant cyber risks that AI faces. Here, I hope to contribute to the cyber security community by raising awareness of the importance of securing AI systems against cyber attacks.”

Common cyber security threats against AI

At Paliscope Analytics, we keep a close eye on the latest developments in cyber security and in the AI field. This includes how AI is used for cyber security, how AI is used in various cyber attacks, and how cyber attacks can target AI models. In this way, we better understand the kind of defence that organizations need to have in place to remain resilient.

Modern businesses that rely heavily on digital technologies have become highly vulnerable to cyber attacks against AI systems. Complex, interconnected ecosystems—including organizations’ digital value chains—present attractive targets for malicious actors who are seeking to cause significant disruption. In many cases, these actors target the critical components of these digital ecosystems to gain access to multiple organizations in so-called cyber supply chain attacks.

We see that the technologies enabling digital transformation and integrated systems within these ecosystems have been identified as particularly vulnerable to attack. By exploiting a single technology vulnerability, malicious actors can give a cyber attack a wide blast radius, as seen in the Kaseya case (2021), where ransomware spread on a large scale and affected multiple organizations. As a result of this trend in cyber supply chain attacks, the broader cyber security community has recognized the urgent need for enhanced security measures and protocols that can help mitigate these risks to organizations.

“The cyber security risks associated with vulnerable AI systems are significant for all digitalized systems. Since so many decision-makers draw insights from data and build intelligence with the help of AI models, we must not underestimate the need to mitigate cyber-related risks in an organization and its development processes.”

A compromised AI model can have devastating consequences for an organization’s business continuity. Organizations need to understand the risks associated with AI systems and take proactive measures to secure them, especially organizations at the front line of software development whose code bases run to a million lines of source code.

The cyber risks associated with AI include data breaches, data manipulation, system downtime, and damage to an organization’s reputation. For example, malicious actors could carry out some of the following cyber attacks.

Types of cyber attacks against AI

- Data poisoning attacks: Data poisoning is one of the most common cyber security threats against AI systems. In this attack, cyber criminals manipulate the data used to train the AI model. By altering the training data, the attacker can influence the model’s decisions, either to sabotage the system’s performance or to benefit the attacker’s interests (source: OWASP 2023).

- Adversarial attacks: Another common threat is the adversarial attack, in which the input data fed to an AI system is manipulated to force the system into making incorrect decisions. For example, by manipulating the pixels in an image, an attacker can cause an AI system to misidentify an object or person.

- Model theft and tampering: Attackers may also try to steal an organization’s AI model or tamper with it. This could involve copying the model, modifying it, or inserting malicious code. Such an attack could have devastating consequences for an organization, particularly if the AI system is used in mission-critical applications.
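
To make the adversarial attack from the list above more concrete, here is a minimal sketch in Python. It is not taken from any real system: the classifier, its weights, the input values, and the `epsilon` budget are all hypothetical and chosen only for illustration. The idea is in the spirit of the fast gradient sign method: for a linear model, the gradient of the score with respect to the input is simply the weight vector, so nudging every feature slightly against the sign of its weight moves the score as far as possible for a given per-feature change.

```python
# Toy linear classifier: score = w . x + b, class 1 if the score is positive.
# All numbers below are hypothetical and exist only to illustrate the idea.
WEIGHTS = [0.9, -0.6, 0.4]
BIAS = -0.1


def score(x):
    """Weighted sum of the input features plus the bias."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Return class 1 for a positive score, otherwise class 0."""
    return 1 if score(x) > 0 else 0


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def adversarial_example(x, epsilon):
    """Change each feature by at most epsilon, in the direction that
    pushes the score towards the opposite class."""
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


original = [0.5, 0.4, 0.2]
perturbed = adversarial_example(original, epsilon=0.15)

print(predict(original), predict(perturbed))  # prints: 1 0
```

No feature moves by more than 0.15, yet the predicted class flips. Real attacks against image models work on many more dimensions, which is why changes that are invisible to a human can be enough.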

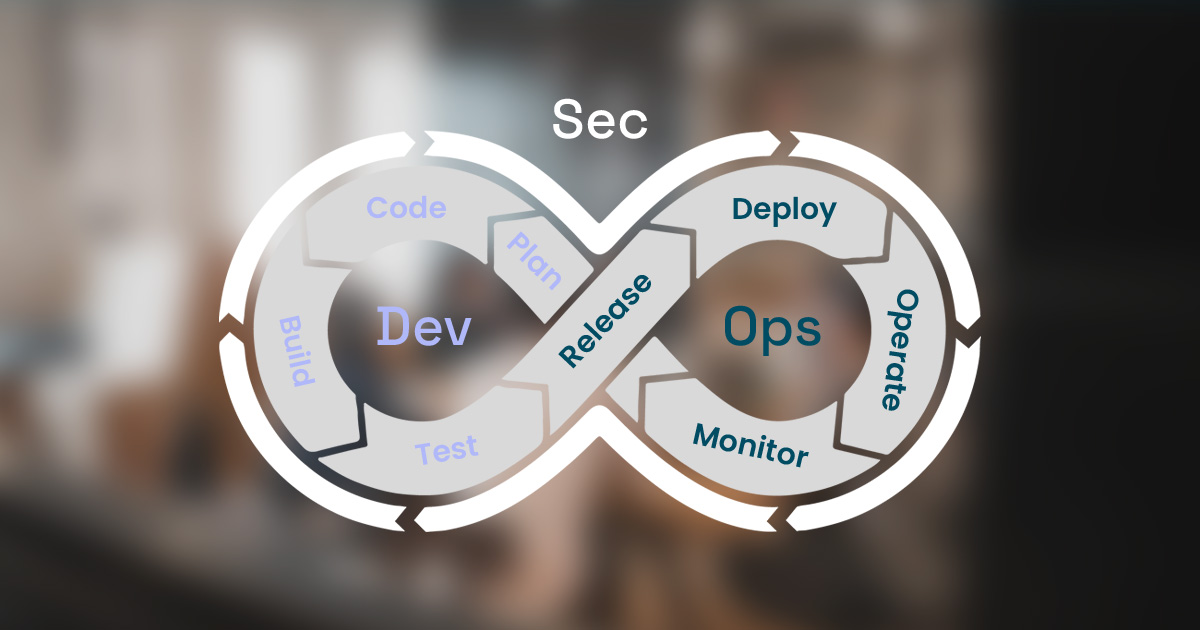

“… it’s crucial for organizations that develop AI systems to prioritize cyber-securing their entire DevOps.”

Cyber-securing AI

In addition to standard cyber security management and hygiene practices throughout an organization, it’s crucial for organizations that develop AI systems to prioritize cyber-securing their systems. By adopting DevSecOps, where “Sec” is added to “DevOps”, organizations can ensure that security is a key part of the design and development process. Conventional routines such as keeping development, testing, and production environments separate are good practices. Other good practices include following guidance from OWASP and using security testing and protection tools such as, but not limited to, SAST/DAST, IAST and RASP.

It’s also essential to ensure that the data used to train AI models is secured, and that sensitive data is avoided where possible. For example, data security specialists could work together with the data science team to implement best practices for securing data.
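
One simple building block for securing training data is an integrity check. The sketch below is only an illustration under stated assumptions: the function names and the sample data are hypothetical, and it presumes that the trusted digest is recorded when the data is approved and stored somewhere an attacker cannot modify. It uses Python's standard `hashlib` and `hmac` modules to detect whether a dataset has changed since it was approved. The same check can be applied to a serialized model file.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, expected_digest: str) -> bool:
    """Compare the current digest with the trusted one in constant time."""
    return hmac.compare_digest(sha256_digest(data), expected_digest)


# Hypothetical training data; in practice this would be read from a file.
training_data = b"label,text\n0,benign sample\n1,malicious sample\n"

# Assumption: recorded at approval time and kept out of the attacker's reach.
trusted_digest = sha256_digest(training_data)

# Simulated poisoning: an attacker flips one label.
tampered_data = training_data.replace(b"1,malicious", b"0,malicious")

print(is_unmodified(training_data, trusted_digest))  # True
print(is_unmodified(tampered_data, trusted_digest))  # False
```

A check like this only detects changes made after the digest was recorded. It does not reveal data that was already poisoned at collection time, so it complements rather than replaces review of data sources.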

Furthermore, it is crucial that organizations relying on suppliers to provide AI systems establish a third-party risk program, and a continuous monitoring routine, in order to ensure proper third-party risk management.

Stay one step ahead

Setting up an in-house security team takes time. Learn how our team of experienced cyber experts, political scientists, and data scientists can help safeguard your organization, either in the interim or long-term, through our continuous security monitoring services.

About Carina

Carina Dios Falk is a Cyber Security Analyst who leads R&D Analytics at Paliscope with a focus on Cyber Security, Data Science, and Artificial Intelligence. She advocates for the responsible use of AI and for the protection of AI models from cyber threats. Carina therefore not only explores the potential applications of AI for Cyber Security but also evaluates the cyber risks posed to AI.